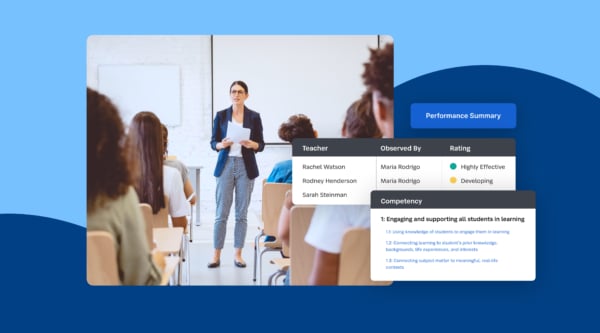

A Unified Future: Transforming Student Outcomes

Discover the keys to unlocking success in K-12 education with insights from the 2024 Family Survey.